Seeing What's Next Part II, Undershot Customers

As Clayton describes, incumbent players (EMC, IBM, etc.) are strongly motivated to add new features that they can charge these undershot customers for, especially in a situation like today where traditional block arrays are rapidly becoming commodities. The problem is that sometimes what he terms a 'radical sustaining innovation' is required: a major re-architecture that changes the whole system from end to end in order to meet new customer needs. An example is when AT&T changed its whole network from analog to digital in the 1970s. They just couldn't move forward and add significant new value without making that end-to-end change.

That's where storage is today. I've repeated that message in this blog but will say it again because Clayton makes this such an important point for understanding innovation. The block interface, which is the primary interface for almost all enterprise-class storage, is over thirty years old now. The basic assumptions the block interface was based on don't apply anymore at these undershot customers. Block-based filesystems assumed storage was a handful of disks owned by the application server. They also assumed the storage devices had no intelligence, so they disaggregated information into meaningless blocks. A block storage array has no inherent way to know anything about the information it is given, so it's extremely limited in its ability to manage any information lifecycle, comply with information laws, or do anything else involving the information. The problem is that now that information has moved out of the application server, away from the filesystem or the database application, and now that it lives in the networked storage server, the information MUST be managed there. This is why an object-based interface such as NFS, CIFS, or OSD, which allows the storage server to understand the information and its properties, is essential. So, the question is, who can create this 'radical sustaining innovation' with its changes up and down the stack from databases and filesystems down to the storage servers?
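To make the contrast concrete, here is a minimal Python sketch of the two kinds of interface. It is purely illustrative: the class names (`BlockArray`, `ObjectStore`), the attribute `retain_until_year`, and the `find` method are hypothetical names I made up for this example, not the API of NFS, CIFS, OSD, or any real product. The point is only that the block array receives numbered blocks with no meaning attached, while the object-style server receives attributes it can act on.

```python
from dataclasses import dataclass, field


class BlockArray:
    """Block interface: the array sees only numbered, fixed-size blocks."""

    BLOCK_SIZE = 512  # assumed block size for this sketch

    def __init__(self):
        self.blocks = {}

    def write(self, lba, data):
        # The array learns a block address and raw bytes, nothing more.
        if len(data) != self.BLOCK_SIZE:
            raise ValueError("block writes must be exactly BLOCK_SIZE bytes")
        self.blocks[lba] = data

    def read(self, lba):
        return self.blocks[lba]


@dataclass
class StoredObject:
    data: bytes
    attributes: dict = field(default_factory=dict)


class ObjectStore:
    """Object-style interface: data arrives together with its properties."""

    def __init__(self):
        self.objects = {}

    def put(self, object_id, data, **attributes):
        # The storage server now knows who owns the data, how long to keep it, etc.
        self.objects[object_id] = StoredObject(data, dict(attributes))

    def get(self, object_id):
        return self.objects[object_id].data

    def delete(self, object_id, current_year):
        # Because the server holds the attributes, it can enforce a
        # (hypothetical) retention policy by itself.
        obj = self.objects[object_id]
        retain_until = obj.attributes.get("retain_until_year")
        if retain_until is not None and current_year < retain_until:
            raise PermissionError(f"{object_id} must be retained until {retain_until}")
        del self.objects[object_id]

    def find(self, **criteria):
        # The server can answer questions about the information it stores.
        return sorted(
            oid
            for oid, obj in self.objects.items()
            if all(obj.attributes.get(k) == v for k, v in criteria.items())
        )
```

With the block array, a retention rule like the one in `delete` has nowhere to live, because the array cannot tell which blocks make up an invoice. With the object-style store, a call such as `store.put("invoice-1", data, owner="finance", retain_until_year=2030)` gives the storage server enough context to track and protect the information itself.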

If we were back in the early 1980s this would be easy. One of the vertically integrated companies such as IBM or DEC would get their architects from each layer of the stack together, create a new set of proprietary interfaces, and develop a new end-to-end solution. Then, if they did their job right, the undershot customers would be happy to buy into this new proprietary solution because it does a better job of solving the four problems above. The problem today is that many of these companies either don't exist anymore, or they've lost the ability for this radical innovation after twenty years in our layered, standards-based industry.

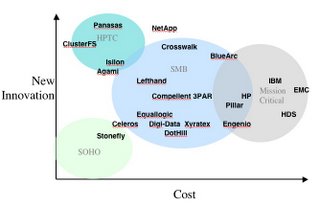

Who could pull off this radical sustaining innovation spanning both the storage and the application server? Clayton recommends looking at various companies' strengths, past records, and their resources, priorities, and values to attempt to identify who the winners might be. Here's my assessment of the possible candidates.

IBM

IBM is probably the farthest along this transition with its StorageTank architecture. StorageTank is a new architecture for both filesystems and storage arrays that does just what I described above - it uses an object protocol to tell the storage array about the information so it can be managed and tracked. What I don't know is how successful it has been or how committed IBM is to this architecture. Twenty years ago it was in IBM's DNA to invest in a major integrated architecture like this. Whether that's still the case, I don't know. Another question is how well the array stands up as a block array in its own right. Datacenters are heterogeneous. It's fine to implement enhanced, unique functions when working with an IBM app server, but the array must do basic block to support the non-IBM servers as well. Will customers buy StorageTank arrays for this? I don't know.

Microsoft

Microsoft is a strong candidate to be one of the winners here. They have a track record of doing interdependent, proprietary architectures. They did it with their web-services architecture. They are moving into the storage server with their Windows Storage Server (WSS) - a customized version of the Windows OS designed to be used as the embedded OS in a storage server. Although I don't know specifics, I would bet they are already planning CIFS enhancements that will only work between WSS and Windows clients. Another strength of Microsoft is they understand that, in the end, this is about providing information management services to the applications and gaining support of the developers to use those services. As storage shifts from standard block 'bit buckets' to true information management devices this application capture becomes more important. This is not part of the DNA of most storage companies. Finally, as we all know, they are willing to take the long-term perspective and keep working at complex new technology like this until they get it right. On the flip side, these undershot customers tend to be the high-end mission critical datacenter managers who may not trust Microsoft to store and manage their data.

EMC

EMC is acting like they're moving in this direction, albeit in a stealth way. They have a growing collection of software in the host stack including PowerPath (with Legato additions) and VMware. They also have a track record of creating and using unique interfaces, so it would not be out of character to start tying their host software to their arrays with proprietary interfaces. What they don't have, though, is a filesystem or, to my knowledge, an object API used by any databases. They also don't have much experience at the filesystem level. They could build a strong value proposition for unstructured data if they acquired the Veritas Foundation Suite from Symantec. An agreement with Oracle on an object API, similar to what NetApp did with the DAFS API, would enable a strategy for structured data.

Oracle

Oracle is a trusted supplier of complex software for managing information and they have been increasing their push down the stack. They have always bypassed the filesystem and now, with Oracle Disk Manager, they are doing their own volume management. Recent announcements relative to Linux indicate they might start bundling Linux so they don't need a third-party OS. With the move to scalable rack servers and the growth of networked storage, they must be running into the problem that it's hard to fully manage the information when it resides down on the storage server. This explains Larry's investment in Pillar. My prediction is that once Pillar gains some installed base in datacenters as a basic block/NAS array, we will see Oracle-specific enhancements to NAS that only work with the Pillar arrays.

Linux and open development

Clayton groups innovation into two types. One type is based on solutions built from modular components designed to standard interfaces. An example is a Unix application developed to the standard Unix API, running on an OS that uses standard SCSI block storage. Here innovation happens within components of the system and customers get to pick the best component to build their solution. The second type is systems built from proprietary, interdependent components, such as an application running on IBM's MVS OS, which in turn uses a proprietary interface to IBM storage. Because standard interfaces take time to form, the proprietary systems have the advantage when it comes to addressing the latest customer problems. When new problems can't be solved by the old standard interfaces, it's the proprietary system vendors who will lead the way in developing the best new solutions.

What Clayton doesn't factor in, however, is the situation in the computing industry today where we have open source and open community development. A board member of the Open Source Foundation once explained to me that the main reason to open source something is not to get lots of free labor contributing to your product. The main reason is to establish it as a standard. For example, Apache became the de facto standard for how to do web serving. This is happening today with NFS enhancements such as NFS over RDMA, pNFS, and V4++. They first get implemented as open source and others look to those implementations as the example. Because Linux is used as both an application server AND as an embedded OS in storage servers, both sides of a new interface get developed in the open community and can quickly become the de facto standard. This is what I love most about Linux and open source. Not only is it leading so much innovation within software components, but when the interface becomes the bottleneck to innovation, the community invents a new interface and implements both sides, making that the new standard.